```mermaid
flowchart LR
    S3[(S3 Bucket)] --> PL[Preprocessing Lambda]
    PL --> Pinecone[(Pinecone)]
    Pinecone --> QL[Query Lambda]
    QL --> User([User])
    classDef aws fill:#FF9900,stroke:#232F3E,color:white;
    classDef external fill:#6CB4EE,stroke:#1A73E8,color:white;
    classDef user fill:#E8E8E8,stroke:#666666,color:black;
    class S3,PL,QL aws;
    class Pinecone external;
    class User user;
```

Introduction

Frequently, we want to search and retrieve relevant information efficiently, based on meaning rather than just keywords. This is a common pattern in LLM-based applications, where the application needs to retrieve content semantically related to a user’s input and add it to the context window.[1]

In this post, I’ll walk through the high-level setup of the backend for a semantic search stack using Pinecone, AWS, and SST. I’ll test it on a recipe dataset.

Vector Search Overview

We need a tractable way to search for documents[2] by semantic meaning rather than just matching keywords. For example, a search for “automobile” might return results about “cars” and “vehicles” even if they never use the exact word “automobile”.

The key intuition behind vector search comes from the “distributional hypothesis” in linguistics: words that appear in similar contexts tend to have similar meanings. Thus:

- If two pieces of information are semantically similar, they should appear in similar contexts.

- If information appears in similar contexts, its vector representations should be close together in the embedding space. We can measure the “closeness” of two vectors with a similarity measure. The most common is cosine similarity, which is the dot product of the two vectors after each has been scaled to unit length.

- Therefore, we can find semantically related content by looking for nearby vectors.
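To make the cosine similarity point concrete, here is a small sketch in plain Python. The three-dimensional vectors are made up for illustration (real embeddings have hundreds or thousands of dimensions); the point is that cosine similarity and the dot product of normalized vectors are the same number.

```python
import math


def dot(a: list[float], b: list[float]) -> float:
    """Sum of element-wise products."""
    return sum(x * y for x, y in zip(a, b))


def normalize(v: list[float]) -> list[float]:
    """Scale v to unit (L2) length."""
    length = math.sqrt(dot(v, v))
    return [x / length for x in v]


def cosineSimilarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between a and b: dot(a, b) / (|a| * |b|)."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))


# Toy 3-dimensional "embeddings", made up for illustration.
car = [0.9, 0.1, 0.3]
automobile = [0.8, 0.2, 0.35]
banana = [0.1, 0.9, 0.0]

print(cosineSimilarity(car, automobile))  # close to 1: similar direction
print(cosineSimilarity(car, banana))      # much smaller: different direction
# Normalizing first turns cosine similarity into a plain dot product.
print(dot(normalize(car), normalize(automobile)))
```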

So we need:

- A way to transform a document into a vector (an embedding).

- A way to run a nearest neighbors algorithm on a set of vectors to find similar ones.
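A brute-force version of the second ingredient fits in a few lines: score every stored vector against the query and keep the top k. This is a sketch with made-up toy vectors. It scans every vector on every query, which is the work that vector databases typically avoid by using approximate nearest-neighbor (ANN) indexes.

```python
import heapq
import math


def cosineSimilarity(a: list[float], b: list[float]) -> float:
    """dot(a, b) / (|a| * |b|)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def nearestNeighbors(query: list[float], vectors: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Exact k-nearest-neighbor search: score every vector, keep the k best.

    Returns (id, similarity) pairs, most similar first. Cost is linear in the
    number of stored vectors for every query.
    """
    scored = ((docId, cosineSimilarity(query, vec)) for docId, vec in vectors.items())
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])


# Toy index of made-up 3-dimensional vectors.
index = {
    "cars": [0.9, 0.1, 0.3],
    "vehicles": [0.85, 0.15, 0.25],
    "bananas": [0.1, 0.9, 0.0],
}
print(nearestNeighbors([0.8, 0.2, 0.35], index, k=2))
```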

Luckily for us, most of the tools to do this already exist.

High Level Architecture

Let’s look at the high-level architecture first.

The diagram illustrates our serverless search architecture. Raw data is stored in an S3 bucket and processed by our Preprocessing Lambda function, which generates embeddings and stores them in Pinecone. When a user makes a query, the Query Lambda embeds it, uses that embedding to retrieve relevant documents from Pinecone, and returns them to the user.

Amazon Web Services

We’ll use common AWS services to build our simple service.

- S3: We’ll use S3 to store raw data files.

- Lambda: We’ll use Lambda for compute.

- Parameter Store: We’ll use Parameter Store to store API keys.

That’s it for now. If we wanted to store additional metadata about our documents, a common pattern is to use DynamoDB, but this is a PoC, so we’ll just store our metadata in Pinecone directly.
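The Lambda code later in this post reads its API keys through a `ParameterStore` helper whose source isn't shown; it's only called as `ParameterStore().getParameter(name)`. Here is a hypothetical reconstruction, assuming boto3's SSM client and that the keys are stored as `SecureString` parameters. The optional `client` argument is my own addition so the class can be exercised without AWS.

```python
from typing import Any, Optional


class ParameterStore:
    """Hypothetical sketch of the post's ParameterStore helper.

    Wraps SSM GetParameter and decrypts SecureString values.
    """

    def __init__(self, client: Optional[Any] = None):
        if client is None:
            # Imported lazily so the class can be tested without boto3 installed.
            import boto3
            client = boto3.client("ssm")
        self.ssm = client

    def getParameter(self, name: str) -> str:
        # WithDecryption=True returns the plaintext of a SecureString parameter.
        response = self.ssm.get_parameter(Name=name, WithDecryption=True)
        return response["Parameter"]["Value"]
```

You would create each parameter once, for example with `aws ssm put-parameter --name PineconeKey --type SecureString --value ...`.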

Serverless

We’ll use a serverless design so that we only have to manage code. We’ll build a few simple libraries for data cleaning, processing, and running queries, then deploy them to AWS Lambda. AWS Lambda is an event-driven compute service that manages compute resources for you. Per unit of compute it’s more expensive than renting servers, but (a) serverless lets us pay only for what we use, and (b) AWS has a generous free tier, so we can build a simple service without too much time, complexity, or expense.

Pinecone

Pinecone is a production-scale serverless vector search database. It will handle all of the vector storage and retrieval logic for us, so we just need to pick the embedding model and the distance metric. Pinecone offers a nice API and Python SDK, so we can manipulate and query our Pinecone instance from Lambda.

As per usual for this blog, my goal is simply to tinker with a new technology, so I haven’t thought too hard about the production tradeoffs of Pinecone relative to competitors.

I’ll rent a Pinecone instance directly through AWS Marketplace. Once again, we should be well within the free tier.

Infrastructure as Code

Infrastructure as Code (IaC) is a way to provision and manage infrastructure using machine-readable instructions rather than manual processes (like the AWS Console). This lets you version control infrastructure configurations, automate deployment processes, ensure consistency, etc. You write code to define any of the infrastructure you need, then use automation tools to deploy and manage that infrastructure.

Popular IaC tools include Terraform, AWS CloudFormation, Azure Resource Manager templates, and Pulumi.

SST

SST is a framework that makes it easier to build serverless applications on AWS. It provides some nice high-level constructs for deploying infrastructure to AWS. I’ll use SST[3] to manage the infrastructure for this project.

Code

First, let’s define an S3 bucket and two Lambdas.

```typescript
// stacks/SearchStack.ts
import { Bucket, Function, StackContext } from "sst/constructs";

export function SearchStack({ stack }: StackContext) {
  // Bucket for the raw JSONL recipe files.
  const rawSearchFiles = new Bucket(stack, "BUCKET_NAME_GOES_HERE", {
    cors: [
      {
        maxAge: "1 day",
        allowedOrigins: ["*"],
        allowedHeaders: ["*"],
        allowedMethods: ["GET", "PUT", "POST", "DELETE", "HEAD"],
      },
    ],
  });

  // Embeds the raw recipes and loads them into Pinecone.
  const preprocessRawDataLambda = new Function(stack, "preprocessRawData", {
    handler: "src/functions/lambda_preprocessing.preprocessRawData",
  });

  // Embeds a user query and searches Pinecone.
  const queryRecipeLambda = new Function(stack, "queryRecipeLambda", {
    handler: "src/functions/lambda_query.queryRecipe",
  });

  // Broad SSM (API keys) and S3 access; tighten these for production.
  preprocessRawDataLambda.attachPermissions(["ssm", "s3"]);
  queryRecipeLambda.attachPermissions(["ssm", "s3"]);

  return {
    rawSearchFiles,
    preprocessRawDataLambda,
    queryRecipeLambda,
  };
}
```

The `Bucket` construct creates an S3 bucket, with a CORS configuration, for storing the raw search files. The two `Function` constructs create two Lambda functions: one for preprocessing raw data and another for querying recipes. Both Lambda functions are granted permission to access AWS Systems Manager (SSM) and S3 (for a production use case you might want to make these more granular). Note that S3 has a global namespace, so you need a unique name for your bucket.

```typescript
// sst.config.ts
import { SSTConfig } from "sst";
import { SearchStack } from "./stacks/SearchStack";

export default {
  config(input) {
    return {
      name: "searchInfra",
      region: "us-east-1",
      profile: "search", // named AWS CLI profile used for deployment
    };
  },
  stacks(app) {
    // Defaults applied to every Function in the app.
    app.setDefaultFunctionProps({
      runtime: "python3.9",
      timeout: 60,
      enableLiveDev: true,
    });
    app.stack(SearchStack);
  },
} satisfies SSTConfig;
```

The second file configures the overall SST application. It sets defaults like the application name, region, and AWS profile. It also sets default properties for all Lambda functions in the application: the Python 3.9 runtime, a 60-second timeout, and live development mode enabled.

Finally, it includes the SearchStack as part of the application.

If we have our AWS account set up properly[4] and run the appropriate commands (like `npx sst dev`, or `npx sst deploy --stage prod` for a standalone deployment), this will deploy our application infrastructure to AWS. You can also hook SST up to your CI/CD tool of choice to automate deployments.

Data Cleaning

Next, we need some documents to run search over.

Data Set

I pulled the Recipe NLG dataset from Kaggle, then wrote a script to chunk the data into JSONL files of roughly 1,000 recipes each, which I then uploaded to S3.[5]
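The chunking script itself isn't shown, so here is a minimal sketch of that step. It assumes the Kaggle download is a CSV with `title`, `ingredients`, `directions`, `link`, and `source` columns in which the list-valued fields are JSON-encoded strings (check your copy), and that you pass in a boto3 S3 client. The `recipes/chunk_0000.jsonl` key layout mirrors the key used later in this post; the function names are hypothetical.

```python
import csv
import json
from typing import Any, Iterable, Iterator


def rowsToRecipes(rows: Iterable[dict]) -> Iterator[dict]:
    """Turn CSV rows into recipe dicts with list-valued fields.

    Assumes ingredients/directions are stored as JSON-encoded lists.
    """
    for row in rows:
        yield {
            "title": row["title"],
            "ingredients": json.loads(row["ingredients"]),
            "directions": json.loads(row["directions"]),
            "link": row["link"],
            "source": row["source"],
        }


def chunkToJsonl(recipes: Iterable[dict], chunkSize: int = 1000) -> Iterator[str]:
    """Group recipes into JSONL strings of at most chunkSize lines each."""
    chunk = []
    for recipe in recipes:
        chunk.append(json.dumps(recipe))
        if len(chunk) == chunkSize:
            yield "\n".join(chunk) + "\n"
            chunk = []
    if chunk:
        yield "\n".join(chunk) + "\n"


def uploadChunks(csvPath: str, bucket: str, s3Client: Any, chunkSize: int = 1000) -> int:
    """Write each chunk to s3://bucket/recipes/chunk_NNNN.jsonl; return the chunk count."""
    count = 0
    with open(csvPath, newline="", encoding="utf-8") as f:
        recipes = rowsToRecipes(csv.DictReader(f))
        for n, body in enumerate(chunkToJsonl(recipes, chunkSize)):
            s3Client.put_object(Bucket=bucket, Key=f"recipes/chunk_{n:04d}.jsonl", Body=body.encode("utf-8"))
            count += 1
    return count
```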

Code

We’ll need an interface to retrieve the data from S3. The following `RawDataInterface` class has a method that reads the object at a given bucket and key and parses each line as a JSON document.

```python
import json

import boto3


class RawDataInterface:
    def __init__(self):
        self.s3 = boto3.client('s3')

    def getJsonlFromS3(self, bucket, key):
        # Download the whole object and decode it as UTF-8 text.
        response = self.s3.get_object(Bucket=bucket, Key=key)
        content = response['Body'].read().decode('utf-8')
        # Each non-empty line of a JSONL file is one JSON document.
        documents = []
        for line in content.strip().split('\n'):
            if line:
                documents.append(json.loads(line))
        return documents
```

Next, we need a way to reformat the data so that we can load it into Pinecone. Let’s make a new class to hold those methods.

```python
class DataCleaner:
    def __init__(self):
        pass

    def cleanRecipeData(self, recipes):
        processedRecipes = []
        for i, row in enumerate(recipes):
            ingredients = row['ingredients']
            directions = row['directions']
            # Plain-text version of the recipe; this is what gets embedded.
            recipeText = f"""Recipe: {row['title']}
Ingredients: {' '.join(ingredients)}
Directions: {' '.join(directions)}"""
            # Stored alongside the vector in Pinecone and returned with query results.
            metadata = {
                'title': row['title'],
                'ingredients': ingredients,
                'directions': directions,
                'source': row['source'],
                'link': row['link'],
            }
            document = {
                'id': f"recipe_{i}",
                'text': recipeText,
                'metadata': metadata,
            }
            processedRecipes.append(document)
        return processedRecipes
```

The `cleanRecipeData` method takes a list of recipes and, for each one, produces two components:

- A plain-text string combining the recipe’s title, ingredients, and directions (to be embedded)

- The metadata for the recipe

Then it returns the list of processed recipes.
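One thing to watch if you process more than one chunk: the IDs here are positions within a single chunk (`recipe_0`, `recipe_1`, …), so a second chunk would produce the same IDs, and because upserting an existing ID in Pinecone replaces that vector, the second chunk would overwrite the first. A hypothetical alternative is to derive the ID from something stable, such as the recipe's link, assuming links are unique in the dataset. If you adopt something like this, make sure the ID that reaches Pinecone is the one stored on the document.

```python
import hashlib


def recipeId(recipe: dict) -> str:
    """Deterministic ID from the recipe's link (hypothetical scheme).

    Unlike an enumerate() counter, this doesn't restart at 0 for each chunk,
    so upserting a second chunk won't overwrite the first.
    """
    digest = hashlib.sha256(recipe["link"].encode("utf-8")).hexdigest()[:16]
    return f"recipe_{digest}"
```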

We’ll need to add the processed recipes to Pinecone. Let’s add a class to interface with Pinecone:

```python
from pinecone import Pinecone, ServerlessSpec

# ParameterStore: the helper used throughout this post to read secrets
# from SSM Parameter Store (not shown here).


class PineconeInterface:
    def __init__(self):
        pineconeKey = ParameterStore().getParameter('PineconeKey')
        self.pinecone = Pinecone(api_key=pineconeKey)

    def getIndex(self, indexName):
        return self.pinecone.Index(indexName)

    def getIndexForTraining(self, indexName, dimension=1536, metric="cosine"):
        existingIndexes = [index.name for index in self.pinecone.list_indexes()]
        if indexName not in existingIndexes:
            spec = ServerlessSpec(cloud="aws", region="us-east-1")
            self.pinecone.create_index(
                name=indexName,
                dimension=dimension,  # OpenAI's embedding dimension
                metric=metric,
                spec=spec,
            )
            print(f"Created new index: {indexName}")
        else:
            print(f"Index {indexName} already exists")
        return self.pinecone.Index(indexName)

    def prepareDocumentsForPinecone(self, documents, embeddingModel, batchSize=100):
        embeddingBatches = []
        for i in range(0, len(documents), batchSize):
            batch = [doc['text'] for doc in documents[i:i + batchSize]]
            embeddings = embeddingModel(batch)
            # Pair each embedding with the document it came from.
            for j, embedding in enumerate(embeddings):
                doc = documents[i + j]
                record = {
                    'id': doc['id'],  # reuse the ID assigned by DataCleaner
                    'values': list(embedding),
                    'metadata': doc['metadata'],
                }
                embeddingBatches.append(record)
        return embeddingBatches

    def loadToPinecone(self, index, embeddedBatch, batchSize=100):
        # Upsert in batches to keep each request small.
        for i in range(0, len(embeddedBatch), batchSize):
            batch = embeddedBatch[i:i + batchSize]
            try:
                index.upsert(vectors=batch)
                print(f"Successfully uploaded batch {i//batchSize + 1}")
            except Exception as e:
                print(f"Error uploading batch {i//batchSize + 1}: {e}")
                raise
```

We’ve crafted four methods:

- `getIndex` simply retrieves an existing Pinecone index.
- `getIndexForTraining` either retrieves an existing index or creates a new one if it doesn’t exist, with parameters for dimension (defaulting to 1536, which matches OpenAI’s embedding size) and similarity metric (defaulting to cosine similarity).
- `prepareDocumentsForPinecone` takes documents, converts their text to embeddings using the provided embedding model, and formats them as records with IDs, embedding vectors, and metadata.
- `loadToPinecone` uploads batches of embedded documents to Pinecone, with error handling to report any issues.
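Because `prepareDocumentsForPinecone` only needs a callable that maps a list of strings to a list of vectors, you can check the record format locally without calling OpenAI or Pinecone. Below is a standalone sketch that follows the same batching logic, takes each record's ID from its document, and adds a length check that the original method doesn't have. `fakeEmbeddingModel` is a hypothetical stand-in for the real model.

```python
from typing import Callable, Sequence

Embedder = Callable[[list[str]], Sequence[Sequence[float]]]


def prepareRecords(documents: list[dict], embeddingModel: Embedder, batchSize: int = 100) -> list[dict]:
    """Build Pinecone-style records (id, values, metadata) batch by batch."""
    records = []
    for start in range(0, len(documents), batchSize):
        batch = documents[start:start + batchSize]
        embeddings = embeddingModel([doc["text"] for doc in batch])
        if len(embeddings) != len(batch):
            raise ValueError("embedding model returned the wrong number of vectors")
        for doc, embedding in zip(batch, embeddings):
            records.append({"id": doc["id"], "values": list(embedding), "metadata": doc["metadata"]})
    return records


def fakeEmbeddingModel(texts: list[str], dimension: int = 4) -> list[list[float]]:
    """Hypothetical stand-in for OpenAI: deterministic vectors from text length."""
    return [[float(len(t))] * dimension for t in texts]
```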

We still need an actual embedding model, though. We’ll use OpenAI’s `text-embedding-ada-002` model.

```python
from time import sleep

from openai import OpenAI

# Documents and queries must be embedded with the same model;
# vectors from different models aren't comparable.
EMBEDDING_MODEL = "text-embedding-ada-002"


class OpenAIClient:
    def __init__(self):
        self.ps = ParameterStore()
        self.apiKey = self.ps.getParameter('openAISearchSecretApiKey')
        self.client = OpenAI(api_key=self.apiKey)

    def generateEmbedding(self, text, model=EMBEDDING_MODEL):
        return self.generateEmbeddings([text], model=model)[0]

    def generateEmbeddings(self, texts, batchSize=100, model=EMBEDDING_MODEL, maxRetries=3):
        allEmbeddings = []
        for i in range(0, len(texts), batchSize):
            batch = texts[i:i + batchSize]
            response = self._processEmbeddingBatch(batch, model, maxRetries)
            # Each result carries an index; sort so embeddings line up with inputs.
            sortedEmbeddings = sorted(response.data, key=lambda x: x.index)
            allEmbeddings.extend([e.embedding for e in sortedEmbeddings])
        return allEmbeddings

    def _processEmbeddingBatch(self, batch, model, maxRetries):
        for attempt in range(maxRetries):
            try:
                return self.client.embeddings.create(model=model, input=batch)
            except Exception as e:
                print(f"Embedding error (attempt {attempt + 1}/{maxRetries}): {e}")
                sleep(1)
        # Fail loudly instead of silently dropping the batch.
        raise RuntimeError(f"Embedding failed after {maxRetries} attempts")
```

The class sets up everything needed to talk to OpenAI’s services. When initialized, it pulls an API key from Parameter Store (so the key never lives in the code) and creates an OpenAI client. There are two main methods for generating embeddings:

- `generateEmbedding` handles a single text input and returns one embedding.
- `generateEmbeddings` handles multiple texts, processing them in batches of 100 to avoid overwhelming the API.
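The retry loop in `_processEmbeddingBatch` waits a fixed second between attempts. A common alternative, swapped in here rather than taken from the post's code, is exponential backoff, which spaces retries further apart when the API is rate-limiting you. A generic sketch; the `sleep` parameter exists only so the behavior can be tested without actually waiting.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def withBackoff(call: Callable[[], T], maxRetries: int = 3, baseDelay: float = 1.0,
                sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `call`, retrying with exponential backoff (baseDelay, 2x, 4x, ...).

    Re-raises the last exception once maxRetries attempts have failed.
    """
    for attempt in range(maxRetries):
        try:
            return call()
        except Exception:
            if attempt == maxRetries - 1:
                raise
            sleep(baseDelay * 2 ** attempt)
    raise ValueError("maxRetries must be at least 1")
```

Inside the class, this would look like `withBackoff(lambda: self.client.embeddings.create(model=model, input=batch))`.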

Now that we can clean data and embed the documents, we can finally construct the entrypoint for our Lambda.

```python
# Entry point for the preprocessRawData Lambda. RawDataInterface, DataCleaner,
# PineconeInterface, and OpenAIClient are the classes defined above.


def preprocessRawData(event, context):
    # Bucket and a single chunk, hard-coded for this PoC.
    bucket = "prod-searchinfra-searchst-quantinsitesrawsearchfil-5wlmp0higomw"
    key = "recipes/chunk_0000.jsonl"

    rdi = RawDataInterface()
    documents = rdi.getJsonlFromS3(bucket, key)
    cleanedDocuments = DataCleaner().cleanRecipeData(documents)

    pineconeInterface = PineconeInterface()
    # Create the OpenAI client once rather than once per batch.
    embeddingModel = OpenAIClient().generateEmbeddings
    embeddedBatch = pineconeInterface.prepareDocumentsForPinecone(cleanedDocuments, embeddingModel)

    recipeIndex = pineconeInterface.getIndexForTraining("recipe-index")
    pineconeInterface.loadToPinecone(recipeIndex, embeddedBatch)
```

Note that this connects back to our SST code: the handler `src/functions/lambda_preprocessing.preprocessRawData` points at this function, so if we trigger the preprocessRawData Lambda, this code runs. Using our library, it grabs a single chunk of data from S3,[6] cleans the recipe data, embeds it, and loads it into Pinecone.

Queries

Our data should be indexed in Pinecone, ready to query. We just need to write the code to perform the queries.

Code

Let’s extend our PineconeInterface class to handle queries to Pinecone.

```python
class PineconeInterface:
    ...

    def queryPinecone(self, index, queryEmbedding, topK=5):
        try:
            # Nearest-neighbor search; include the stored metadata in the results.
            results = index.query(
                vector=queryEmbedding,
                top_k=topK,
                include_metadata=True,
            )
            return results
        except Exception as e:
            print(f"Query failed: {e}")
            return None
```

We hand the method an index and an embedded query, and it returns the `topK` (by default 5) most similar entries in the index, or `None` if the query fails.

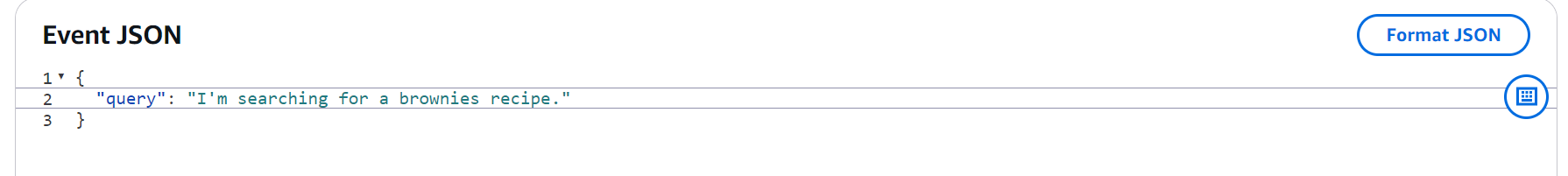
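The raw response includes every metadata field for every match. If a caller only needs a short list, a small post-processing step helps. A sketch, assuming the response has first been converted to a plain dict shaped like the example output further down (how you get that dict depends on your Pinecone SDK version, so check its response types); `summarizeMatches` is a hypothetical helper.

```python
def summarizeMatches(response: dict) -> list[dict]:
    """Flatten a query response dict into compact id/title/score/link rows.

    Assumes the shape {'matches': [{'id', 'score', 'metadata': {...}}, ...]}.
    """
    return [
        {
            "id": match["id"],
            "title": match.get("metadata", {}).get("title"),
            "score": round(match["score"], 3),
            "link": match.get("metadata", {}).get("link"),
        }
        for match in response.get("matches", [])
    ]
```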

Let’s look at the entrypoint:

```python
def queryRecipe(event, context):
    query = event["query"]
    # Embed the user's query so it can be compared against the stored vectors.
    embeddedQuery = OpenAIClient().generateEmbedding(query)

    pineconeInterface = PineconeInterface()
    recipeIndex = pineconeInterface.getIndex("recipe-index")
    results = pineconeInterface.queryPinecone(recipeIndex, embeddedQuery)
    if results is None:
        return {"statusCode": 500, "body": "Query failed"}

    return {"statusCode": 200, "body": results.to_str()}
```

Example

Once deployed, we can test our query Lambda in production.[7]
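If you would rather script the test than use the console, here is a sketch using boto3's Lambda `invoke`. The function name is a placeholder: SST generates the deployed name, so copy it from the Lambda console. The `{"query": ...}` payload matches what `queryRecipe` reads from its event; the optional `client` argument is only there for testing.

```python
import json
from typing import Any, Optional


def invokeQueryLambda(functionName: str, query: str, client: Optional[Any] = None) -> dict:
    """Synchronously invoke the query Lambda and return its parsed response."""
    if client is None:
        import boto3  # lazy import so the function can be tested with a fake client
        client = boto3.client("lambda")
    response = client.invoke(
        FunctionName=functionName,
        InvocationType="RequestResponse",
        Payload=json.dumps({"query": query}).encode("utf-8"),
    )
    # Payload is a stream containing the handler's JSON-encoded return value.
    return json.loads(response["Payload"].read())
```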

Here’s what the top output looks like:

```
{'matches': [{'id': 'recipe_340',
              'metadata': {'directions': ['Mix all ingredients together; add '
                                          'nuts.',
                                          'Pour into a greased and floured '
                                          'cookie sheet.',
                                          'Bake only 23 minutes in a 350° '
                                          'oven. Cool and frost.'],
                           'ingredients': ['1 1/2 c. white sugar',
                                           '1 1/2 c. brown sugar',
                                           '4 Tbsp. cocoa',
                                           '2 c. flour',
                                           '6 eggs',
                                           '1 1/2 sticks oleo, melted',
                                           '1/2 c. milk',
                                           '1 tsp. vanilla',
                                           '1/4 tsp. salt',
                                           '1/2 c. nuts'],
                           'link': 'www.cookbooks.com/Recipe-Details.aspx?id=934472',
                           'source': 'Gathered',
                           'title': 'Brownies'},
              'score': 0.652191401,
              'values': []},
             ...
```

Summary

We’ve built a complete semantic search backend using AWS, Pinecone, and SST. This approach gives you a powerful, scalable foundation for semantic search that can handle a variety of use cases beyond just recipes. The serverless architecture means you only pay for what you use, making it cost-effective even for small projects or experiments.

Next Steps

At this point, we have a vector database running in AWS. AWS is a powerful tool, and with some elbow grease this could be used as part of a production application. For example, you could use SST to deploy the Lambda functions behind API Gateway, build a front end, etc. Be careful if you do this: AWS can be dangerous if you don’t know what you’re doing. But if that’s your goal, you’re welcome to figure it out.

That’s not my plan, however. I will hopefully be using this stack for some experiments. Stay tuned!

Footnotes

1. This is commonly referred to as retrieval-augmented generation (RAG).
2. The specific unit will depend on the use case. It could be a sentence, a document, a picture, etc. I’ll call these “documents” for simplicity.
3. I’ll use SSTv2 for this project, since at the time of writing SSTv3 doesn’t support Python Lambda environments. I’m also on `openai==1.61.0` and `pinecone==6.0.1`, in case you’re following along.
4. Left as an exercise to the reader.
5. I had Claude write this one.
6. It should be straightforward to upload more data.
7. To trigger the Lambda manually, I’m adding the expected input to the console and hitting the “test” button.