Introduction

In a previous essay I considered the problems associated with generating novels. With the introduction of the new Nano-Banana Pro, let’s revisit those same basic information bottleneck argument with respect to pictures. All arguments are back-of-the-envelope.

Count the Bits

Consider the map:

\[ F: \text{Text} \to \text{Pictures} \]

\(F\) is Nano-Banana (or any other generative model that produces pictures). You feed it a sequence of words and out pops a picture1.

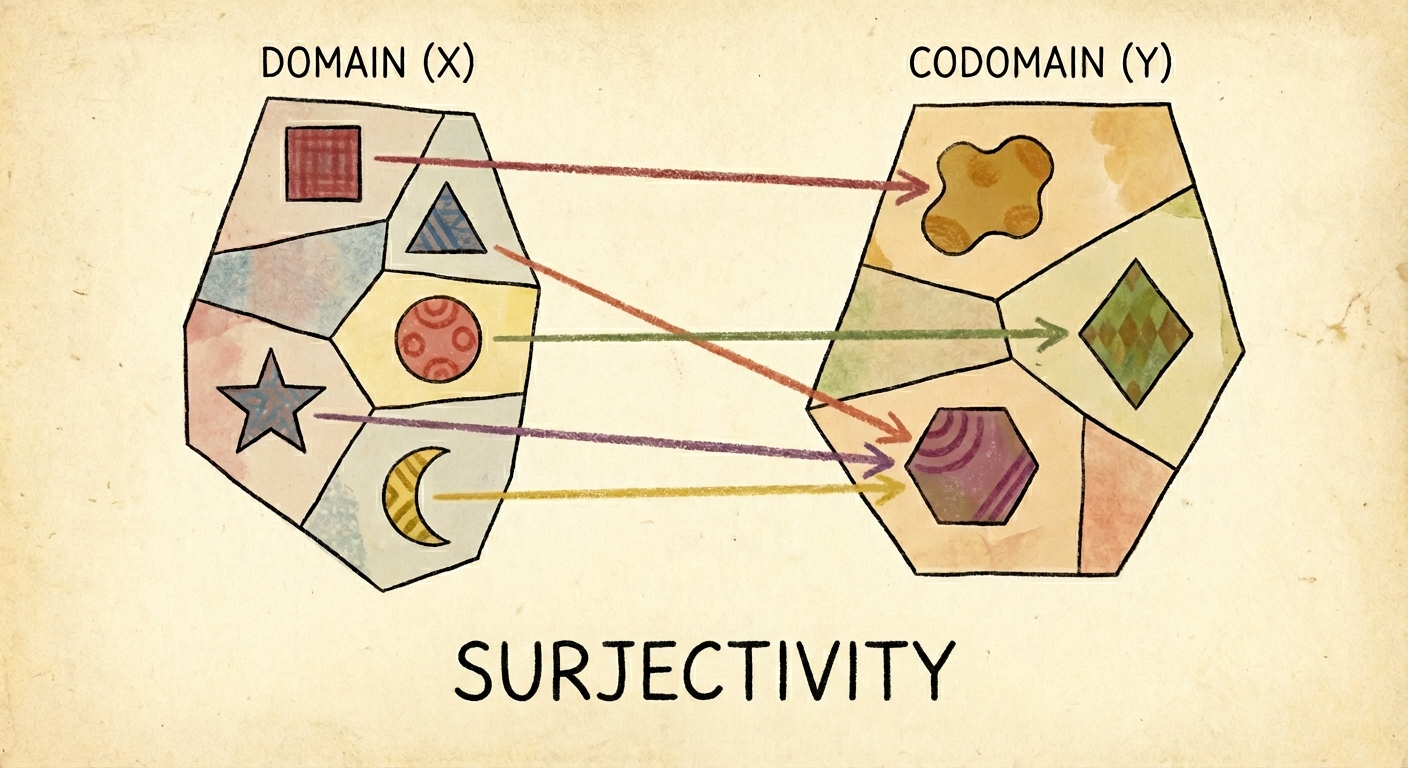

Let’s suppose for simplicity that \(F\) is a function (so a given input produces just one deterministic output) and that \(F\) is surjective2 (for a given picture, there is at least one “text” that maps to it).

How many possible pictures are there?

Let’s assume pictures are 2048 x 2048 pixels3. Then there are \(4194304\) total pixels. At 24 bits/pixel, we have \(100663296\) bits in a picture.

If the map was a surjective function, the number of inputs must be at least as large as the number of possible outputs. In order to specify a particular pixel array uniquely, we need to come up with a set of words that point to it. If we assume a generous 15 bits per word4, and an input must be at least \(100663296\) bits, we therefore need at least \(6710886\) words, or roughly \(13000\) single-spaced pages of text, to exactly specify one image.

Natural Image Manifold

Obviously, that conclusion is absurd. It’s not actually that hard to generate roughly what you want in Nano-Banana Pro. For example, the picture above I generated with 11 words. The result was reasonable, and close enough to my intent that I included it here.

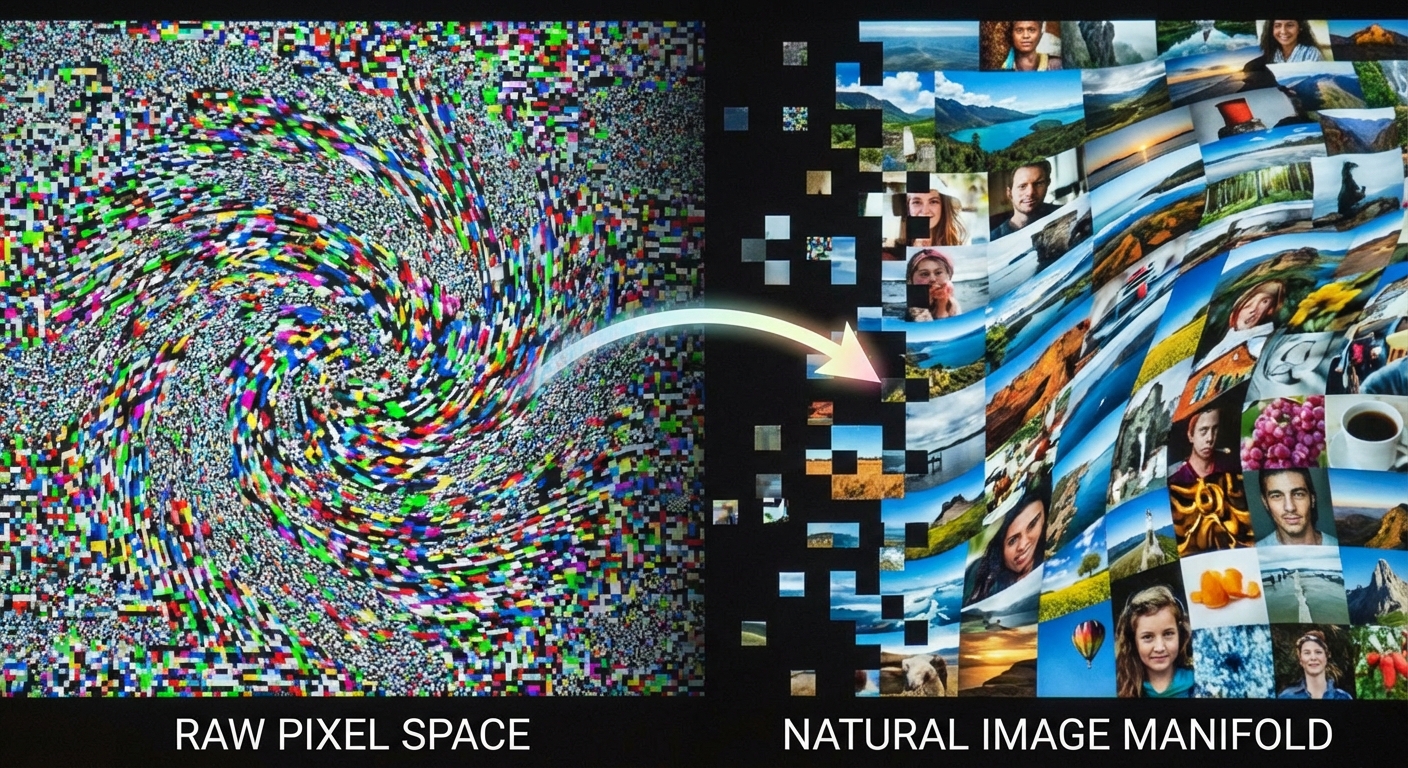

Most random arrangements of pixels look like static noise. The ‘natural image manifold’ is the (very small) subsection of pixel space containing images recognizable (or at least, of interest) to humans. And the map from text to images is not actually surjective.

How big is the natural image manifold?

State-of-the-art codecs can get 0.3–0.7 bpp before noticeable artifacts show up5. Let’s take the middle value. Our 2048 by 2048 image has \(4194304\) pixels. At 0.5 bpp, that’s approximately \(2097152\) bits of perceptually relevant information. That’s around 140000 words, or 280 pages.

So we might say that a picture is worth 140000 words.

Semantic Images

In practice, usually a human is looking for an image drawn from a rough equivalence class of images rather than a particular set of pixels.

A prompt like “a robot on a bench” is a whole region of the natural image manifold, which contains millions of possible pictures. You can ask:

- which robot?

- what exact pose?

- surrounded by which tree species?

- where is the sun?

- what’s the weather?

- how high is the camera?

- is it a 35mm lens? an 85mm lens?

- are there spiderwebs? broken branches?

People care about object identity, relations, scene type, lighting category, viewpoint class, mood, style, etc. How many bits of semantic control over images does a user really need? And given a piece of text, most of the text is redundant in terms of semantic bits. The same choices are reinforced: which objects are present, the style, the lighting, etc.

A toy upper bound might be 50 bits. That’s roughly \(10^{15}\) possible categories. And a 50 bit password is already very secure6. That’s why prompting can work as well as it does. The gap is filled with non-semantic visual content: whatever the user takes for granted.

So is a picture worth a thousand words? It depends on the picture, the words, and what the viewer cares about.

Art Golf

Consider the following game (“Art Golf”). Take an image or piece of art (especially an abstract image, or unknown work). Without giving the name of the artist, the name of the piece of art, or the name of a particular artistic movement, try to prompt the generative model to produce the original piece of art using only text. Fewer words is better.

Final Thoughts

If you use a prompt of 10 to 50 words (150 to 700 “bits on disk”), and a natural 2K image is 2.5 million bits, then the model must be filling in 99.9%+ of the visual detail. You can call this “hallucinating”, or “inference”: at the end of the day the human is making a small number of decisions to determine a much larger object.

I don’t think making the model bigger can “fix” this. We might better approximate the natural image distribution (needing fewer words to specify an image), but at the end of the day there’s no way to produce a uniquely specified image unless the prompt contains enough bits to select that image. The human must specify all the necessary bits to identify the required image.

This isn’t limited to AI, but applies in general to all principals and agents. If you commission an artwork or design, you commission a specification and the artist figures out the details. Outsourcing to an external party is only valuable when you don’t want to specify every bit.

While they are both considered “AI”, the problem of intent seems fundamentally different than the problem of converting between words and text.

AI Disclosure

All of the images in this post were made using Nano-Banana Pro.

Footnotes

In theory you can also feed a generative model a picture, or information in some other form. You could add sketches, CAD models, structured scene graphs, reference images, etc. But while this does make things much more efficient, we ultimately run into the paradox where we are specifying the image to specify the image.↩︎

Probably not actually realistic. Most images will appear to be noise and will not be determinable in words. This is why we get such vast numbers: you’d have to specify each pixel one-by-one. Note also that we don’t ask for injectivity (one-to-one). If the function was both injective and surjective it would be invertible (we could invert it to take pictures to text). Lack of injectivity allows more that one prompt to map to a single image.↩︎

I looked for the technical specs and didn’t immediately find them. Seems like 2048 by 2048 at 2k resolution. This is an approximation; I don’t think it should affect the argument.↩︎

Classic results by Claude Shannon put an English word somewhere between 10-15 bits. See the bottom of the section here.↩︎

If we did have an injective and invertible Nano-Banana, we could store an image as text and encode/decode it with the model. I would be very interested to see if some variation of Nano-Banana or other Neural Image Compression frameworks can reliably compress images better than a modern codecs, without visual artifacts.↩︎

See here. ~50 bits of “real” entropy is enough for a reasonable password. We could extend the number of semantic bits by more without affecting the argument.↩︎